Ethical & sustainable AI: realistic promise or utopian delusion?

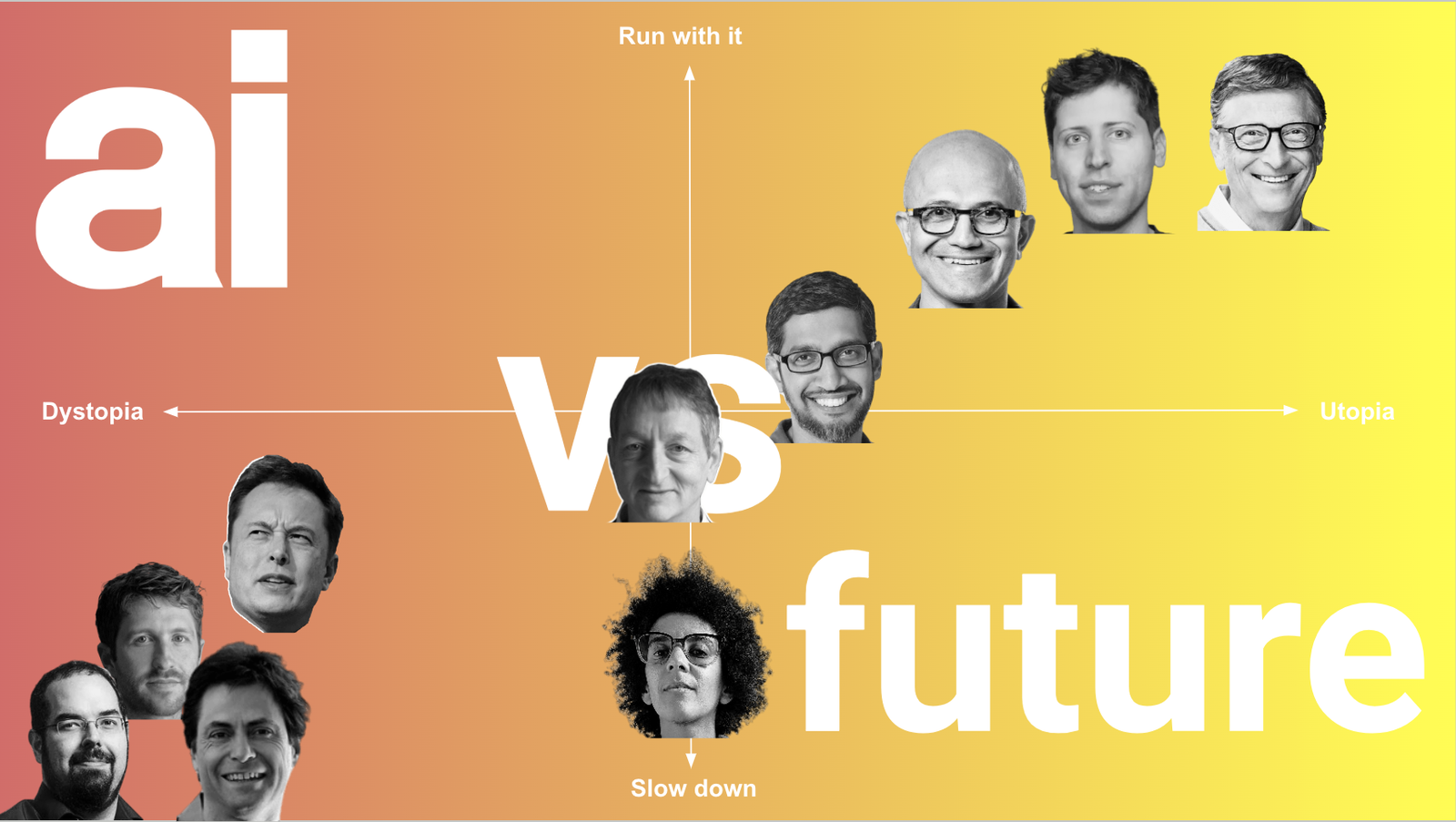

AI holds great potential, at least in the abstract. But why can’t we shake the feeling that AI is not going to make us better human beings? Maybe it has something to do with the likes of Eliezer Yudkowsky, Tristan Harris, Max Tegmark, Elon Musk and other “AI Doomers” foretelling a rather gloomy fate for us because of AI, or at least, like Geoffrey Hinton, clearly warning us about AI-related dangers.

Source: the author, updated & adapted from Nitasha Tiku, Washington Post

But then there are also more optimistic folk like Sundar Pichai, Satya Nadella, and Sam Altman, who are charging ahead into what could become an entirely game-changing AI utopia, where all our problems, illnesses, and needs are somehow resolved by AI. And, of course, don’t forget Bill Gates himself, who predicts that over the next 5 to 10 years AI will entirely change the way people work, learn, travel, access healthcare, and communicate. As a major philanthropist, Gates also sees AI’s potential to reduce global inequalities: improving global health by saving the lives of children in poor countries, raising education levels by improving maths skills for disadvantaged students, and, of course, helping to combat climate change.

So, all in all, quite a few smart people seem to consider (ethical) AI a plausible means of reducing the worst inequities and problems in this world, especially as the technology advances and wider adoption leads to strong ethical guardrails for its use. Given such guardrails, AI might indeed favour the social good of the many over the profits of the few. There have also been several recent advances in AI research that show promise for bringing this technology in line with our moral and ethical aspirations. The most prominent is the increasing convergence of discoveries in cognitive science and neuroscience with conceptually parallel developments in AI. These converging discoveries could not only significantly improve our understanding of how our own brain and mind produce ethical behaviour, but might also become the technological basis for the very first truly “ethical machines”.

Among the various strands of such converging research, the following three deserve special attention:

However, there are of course far too many “lost causes” of unethical behaviour for us to believe that a problem as intrinsically human as ethics could ever be solved by technology alone. Specifically, the following issues come to mind:

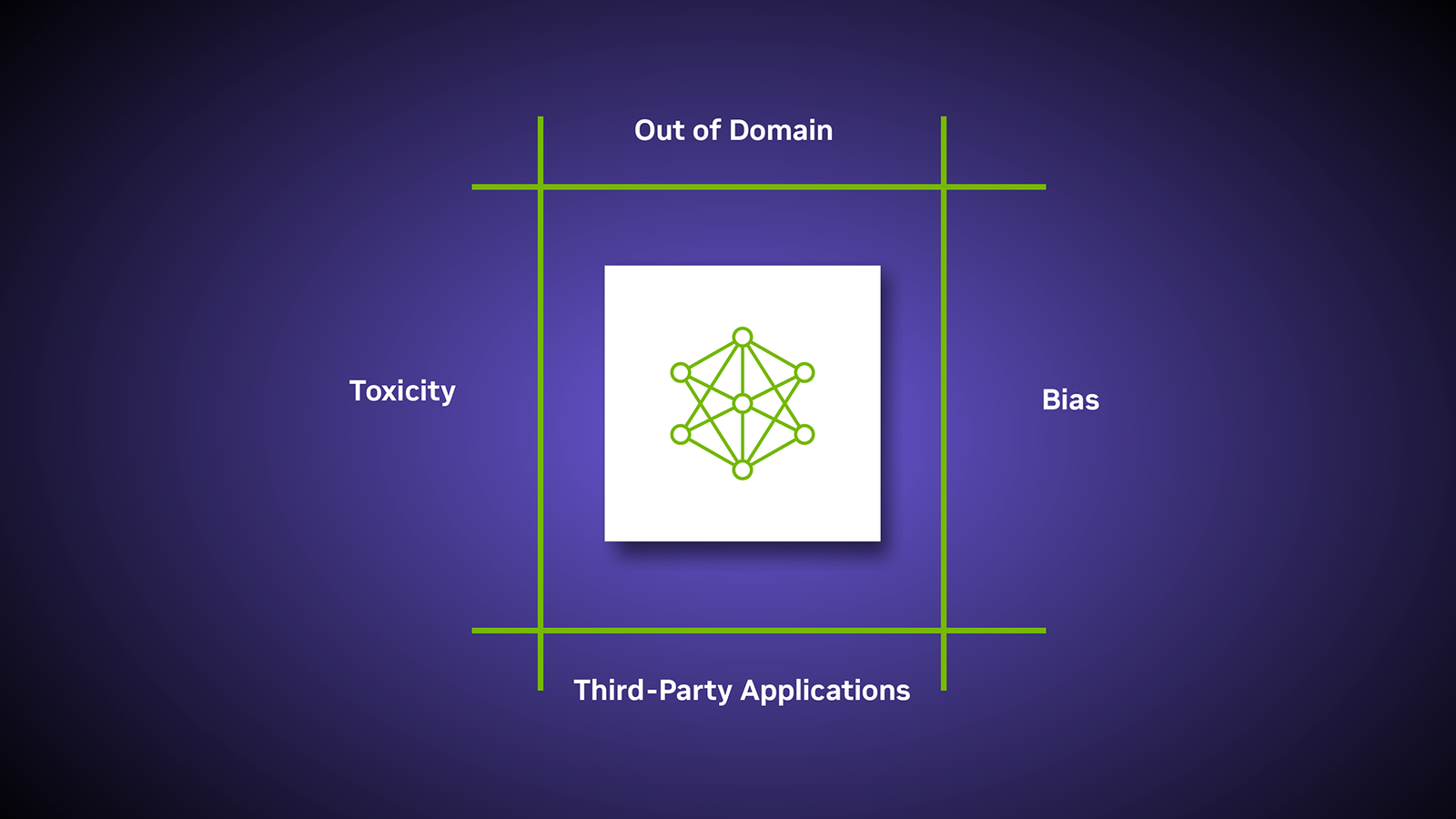

Given this bleak insight into the human psyche, what does technology have in store for us when it comes to solving actual ethical issues? One much-cited technological approach is the use of so-called “guardrails”: specific behavioural principles “hardcoded” into the inner workings of generative AI infrastructure to prevent the technology from violating social and ethical norms. One example is the set of “toxicity” parameters that can be configured in Nvidia’s customisable AI infrastructure, “NeMo”.

Source: Nvidia NeMo product demo

The problem with such guardrails, however, is that it is not ethical behaviour that is being modelled into AI technology, but rather exemptions or “edge cases”, which are manually avoided because of their potentially “toxic” nature. In other words, genuine ethical dilemmas are not solved but sidestepped.
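To make the “edge case” point concrete, here is a minimal, hypothetical sketch of how a threshold-based toxicity guardrail behaves. It is not NeMo’s API: `toxicity_score` is a toy keyword scorer standing in for a trained classifier, and `TOXICITY_THRESHOLD` and `FLAGGED_WORDS` are assumed values chosen for illustration. The only thing such a filter can do is block or pass a response; it never engages with the ethical question itself.

```python
# Hypothetical sketch of a threshold-based "toxicity" guardrail.
# NOT Nvidia NeMo's API: scorer, word list and threshold are illustrative assumptions.

TOXICITY_THRESHOLD = 0.2  # assumed cut-off; real systems tune this value

# Toy stand-in for a trained toxicity classifier (assumption).
FLAGGED_WORDS = {"idiot", "hate", "stupid"}

REFUSAL = "I'm sorry, I can't help with that."


def toxicity_score(text: str) -> float:
    """Return the share of words in `text` that appear on the flagged list."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    if not words:
        return 0.0
    flagged = sum(1 for w in words if w in FLAGGED_WORDS)
    return flagged / len(words)


def apply_guardrail(response: str) -> str:
    """Pass the response through unchanged, or replace it with a refusal."""
    if toxicity_score(response) >= TOXICITY_THRESHOLD:
        return REFUSAL
    return response


if __name__ == "__main__":
    # Surface-level toxicity is blocked...
    print(apply_guardrail("You idiot, I hate this"))
    # ...while a genuine ethical dilemma passes through unexamined.
    print(apply_guardrail("Is it fair to ask poorer countries to cut emissions as fast as rich ones?"))
```

The design choice is the point: the filter only knows what to avoid, so the hard question in the second call is neither answered nor even recognised as ethical.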

But what about the potential of AI, especially LLM-powered AI, to support and positively influence interpersonal communication? As AI starts to hold more human-like conversations, could it not also facilitate difficult conversations and so help achieve more ethical, perhaps more sustainable, results?

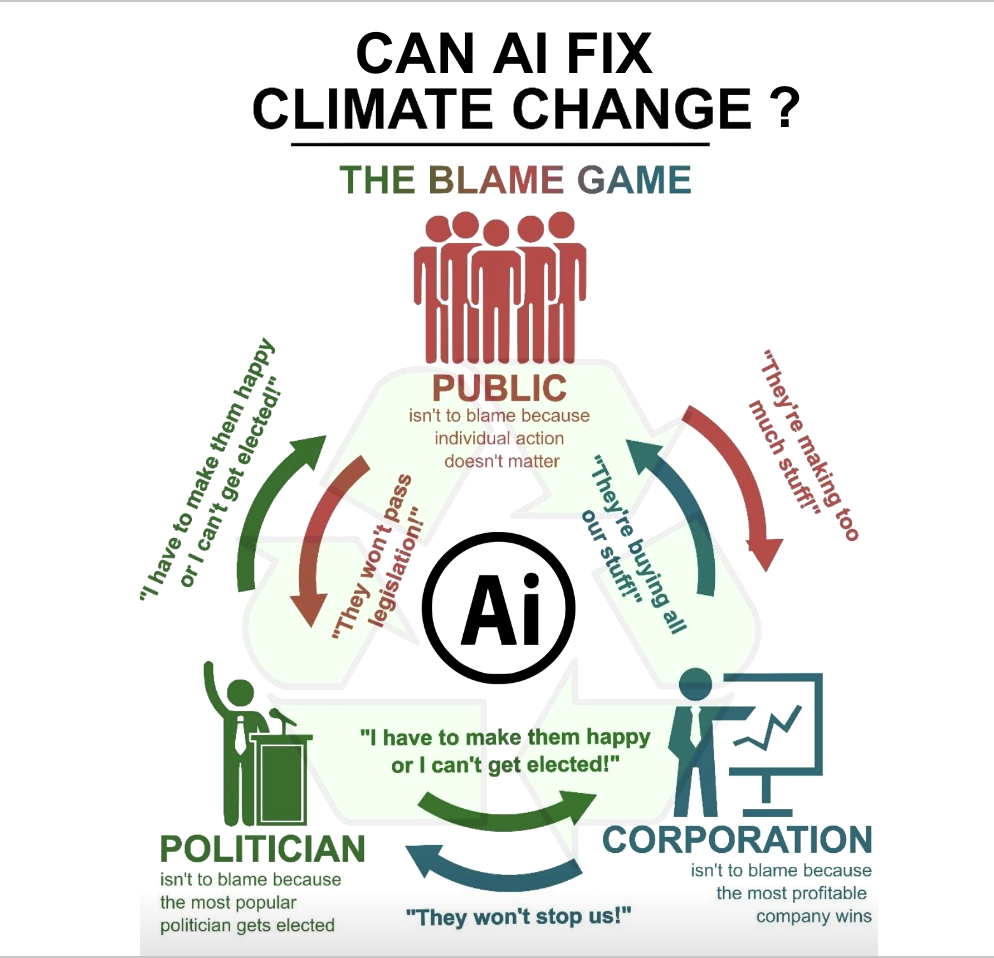

Source: the author, adapted from “Sustainability Professionals”

The problem here, however, is not that AI technology would be unable, in principle, to help create more sustainability-friendly communication. It is rather that we don’t actually want to fix climate change, biodiversity loss or any other pressing environmental issue, because doing so would necessarily require cutting our “standard of living”, at least for the majority of privileged people in Western, overconsumption-based societies. Upholding this “blame game” pattern of ineffective and self-delusional communication is therefore ultimately an expression of our implicit preference to avoid change, especially when it means sacrificing “well-deserved” privileges. Psychologists describe the underlying tendency, whereby losses loom larger than equivalent gains, as “loss aversion”.
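Loss aversion can be illustrated with the value function from Kahneman and Tversky’s prospect theory. The sketch below uses the commonly cited estimates of about 0.88 for diminishing sensitivity and about 2.25 for the loss-aversion coefficient; treat both as illustrative assumptions, not as measurements of anyone’s attitude to climate policy. It shows why a cut in living standards and an equal-sized gain elsewhere do not feel like they cancel out.

```python
# Sketch of the prospect-theory value function with illustrative parameters.
# ALPHA and LAMBDA are commonly cited estimates, used here as assumptions.

ALPHA = 0.88   # diminishing sensitivity to larger gains and losses
LAMBDA = 2.25  # loss-aversion coefficient: losses weigh ~2.25x as much as gains


def subjective_value(change: float) -> float:
    """Perceived value of a change relative to the current reference point."""
    if change >= 0:
        return change ** ALPHA
    return -LAMBDA * ((-change) ** ALPHA)


# A cut in living standards and an equal-sized gain elsewhere...
loss = subjective_value(-100.0)
gain = subjective_value(100.0)

# ...do not cancel out: the combined feeling is clearly negative.
net = loss + gain

if __name__ == "__main__":
    print(f"loss={loss:.1f}, gain={gain:.1f}, net={net:.1f}")
```

Because the reference point is the status quo, any policy framed as “giving something up” starts at a psychological deficit, even when objectively balanced by gains.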

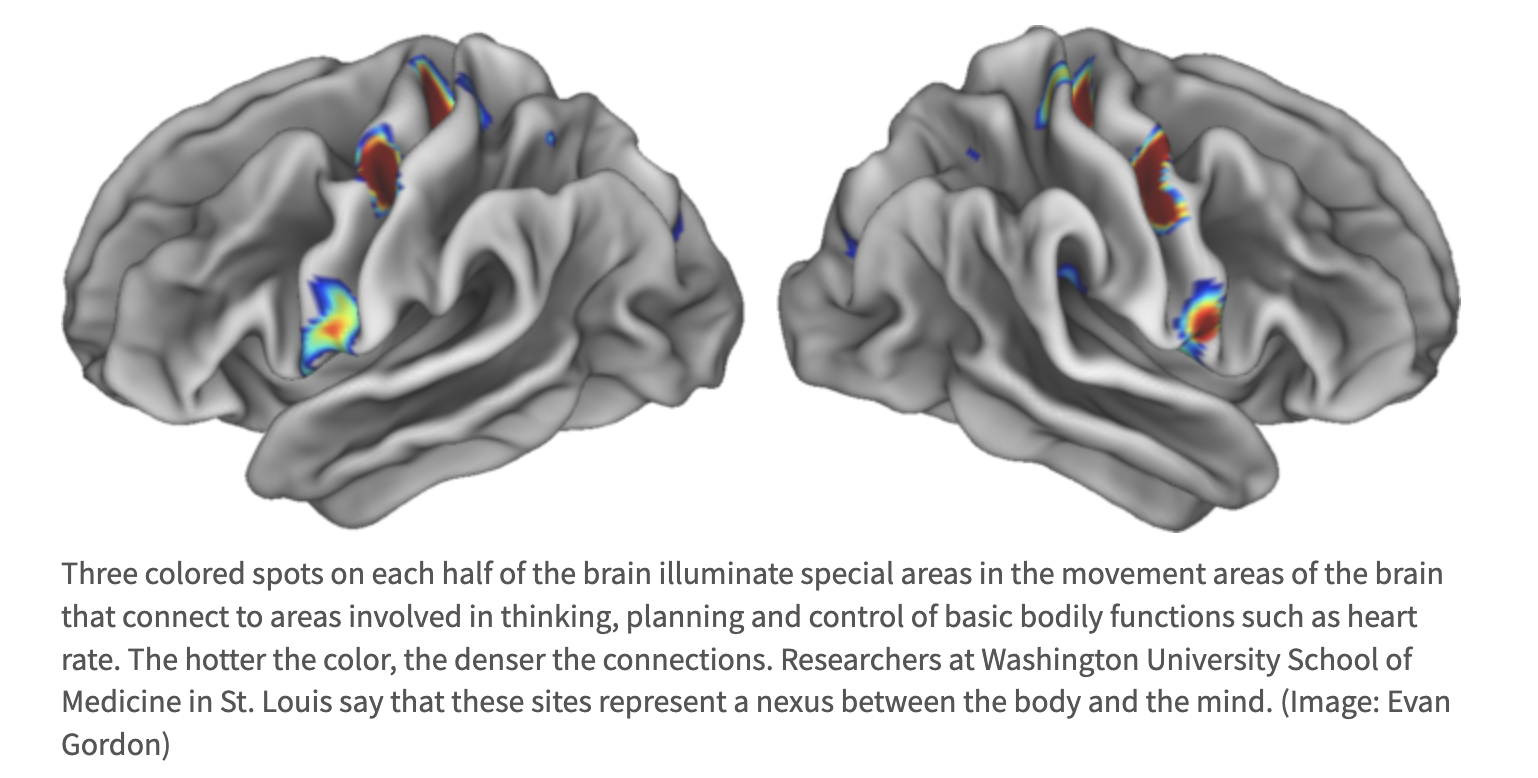

But what if we combine cutting-edge AI with deep philosophical insight into ethics? One such attempt applies to AI the “Veil of Ignorance”, a thought experiment developed by John Rawls to identify fair principles for governing society. The problem is that people actually using such AI systems won’t simply suspend their self-serving judgment because of a hypothetical situation in which they could end up in any place or position in the world, including a disadvantaged one. This is where the body controls the mind: Embodied Cognition has an “ethical” dimension, insofar as the mind cannot simply “forget” that it is kept alive by its body, because it is itself part of that very body. Recent research has even detected such a “link between body and mind … embedded in the structure of our brains, and expressed in our physiology, movements, behaviour and thinking”:

Source: Evan Gordon, via Washington University School of Medicine

Using publicly available fMRI data, the study showed that parts of the brain that control movement are also plugged into networks involved in thinking and planning, and in the control of involuntary bodily functions such as blood pressure and heart rate. So how are we expected to “abstract away” our own bodies from our thinking, when those very bodies shape who we are and what we think in the first place?

What we can learn from this is that our Western, Cartesian way of conceptualising the mind as completely detached from the body, in the sense of the often-quoted “cogito, ergo sum”, is actually holding us back from more ethical behaviour. This is because we assume that we can simply “think our way” towards such behaviour in the abstract, alone or with the help of AI. Realising that the connection between body and mind is literally built into the structure of the brain should help us understand how crucial it is to factor in our bodily existence when devising frameworks for ethical behaviour.

One way of doing this could be to devise a Veil of Ignorance approach whereby nations and individuals alike would be subjected, not just abstractly but physically and personally, to a sustainability policy based on the worst possible impact that biodiversity loss and climate change could have on any geographic area on this planet. But to implement such an approach, we would again all have to come together as equals at a planetary “roundtable” and put the fate of the planet before our own particular interests.
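Rawls argued that, behind the veil, it is rational to follow a “maximin” rule: choose the option whose worst outcome is best. The worst-impact policy proposed in the previous paragraph can be sketched that way. Everything in the example (policy names, regions, wellbeing scores) is an invented placeholder, not data; the contrast function shows how a simple “maximise the total” rule can pick a different policy.

```python
# Hypothetical maximin choice behind a "Veil of Ignorance".
# Policy names, regions and scores are invented placeholders, not real data.
from typing import Dict

# Wellbeing outcome (higher is better) for each region under each policy.
OUTCOMES: Dict[str, Dict[str, float]] = {
    "business_as_usual": {"north": 14.0, "south": 2.0, "islands": 1.0},
    "moderate_cuts": {"north": 7.0, "south": 4.0, "islands": 3.0},
    "rapid_transition": {"north": 6.0, "south": 5.0, "islands": 5.0},
}


def worst_case(outcomes: Dict[str, float]) -> float:
    """Outcome of the worst-off region: what you risk if you don't know where you live."""
    return min(outcomes.values())


def choose_behind_veil(policies: Dict[str, Dict[str, float]]) -> str:
    """Pick the policy whose worst-off region fares best (maximin rule)."""
    return max(policies, key=lambda name: worst_case(policies[name]))


def choose_by_total(policies: Dict[str, Dict[str, float]]) -> str:
    """Contrast: pick the policy with the highest total, ignoring who bears the losses."""
    return max(policies, key=lambda name: sum(policies[name].values()))


if __name__ == "__main__":
    print("Behind the veil:", choose_behind_veil(OUTCOMES))
    print("By total welfare:", choose_by_total(OUTCOMES))
```

With these placeholder numbers, the total-welfare rule favours the status quo because the “north” gains a lot, while the maximin rule favours the policy that protects the most exposed regions. That gap is exactly the self-interest the Veil of Ignorance asks us to set aside.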

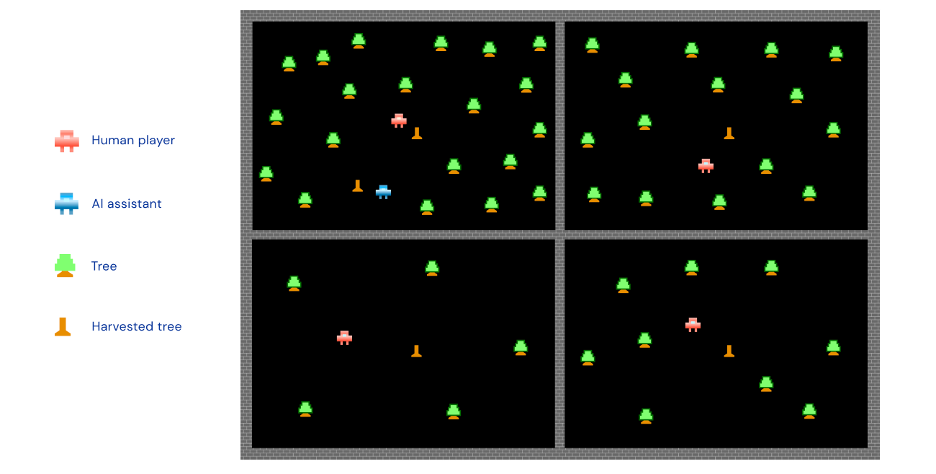

This illustration shows the “harvesting game” referenced by Google DeepMind, in which players occupy either a dense field, where trees are easier to harvest, or a sparse field that requires more effort to collect them. Interestingly, this notion of “ethical” behaviour leaves out the idea that the trees themselves should also have a voice.

Looking at what is happening at the international political level, we may have our doubts about such a “planetary roundtable of equals” becoming a reality any time soon. Nonetheless, as AI continues to make great strides, we might reach a point where it can devise ethical policies that surpass the quality and effectiveness of whatever is currently applied at a national or even supranational level. This will be an interesting inflection point: the first time machines act more ethically than humans. But until then we have to keep in mind that:

AI is [only] a mirror to who we are.

In light of this, it makes sense to close by looking at what AI has to say about our future role as humans in the era of AI, which is exactly what John Duffield asked ChatGPT in his prompt: “What is our role as humans in the era of Artificial Intelligence and beyond? What evolves for the human condition?”. ChatGPT answered as follows:

“Humans may focus on ethical decision-making regarding technology, exercise creativity and innovation, prioritise emotional and social connections, engage in lifelong learning, act as stewards of the environment, and pursue exploration and discovery. The evolving roles of humans will be shaped by individual choices, societal values, technological advancements, and global challenges, and are likely to be diverse across different cultures”

It therefore seems that it is not AI but we humans who are responsible for ethical and sustainable behaviour, with AI having the abstract potential to help, but ultimately no ability to relieve us of this uniquely human responsibility.

Let’s embrace this responsibility together.

illuminem Voices is a democratic space presenting the thoughts and opinions of leading Sustainability & Energy writers; their opinions do not necessarily represent those of illuminem.