A.I., our global wunderkind: what are the risks?


Artificial Intelligence (AI) is booming, with Nvidia now worth $2.2 trillion. Nvidia is the leading supplier of Graphics Processing Units (GPUs), which are critical components for running large language models (LLMs). The company holds a market share of roughly 80%, and its H100 GPU costs around US$40,000. AI is the next wave that will enable global productivity gains and an explosion of possibilities not seen since the Internet boom of the late nineties. It is, therefore, no wonder that the world is so enthusiastic about AI and its potential uses. Like everyone familiar with technology, I am watching its developments closely.

I used a first- to fourth-order causal effect analysis to assess AI's risks as it grows worldwide. These risks require attention if AI is to continue its growth trajectory. For the first causal effect, I looked at the relationship between AI and energy use by evaluating the GPU energy use of the two leading LLMs, Gemini and ChatGPT.

To estimate the number of GPUs an LLM needs to support 100 million monthly users, I used the following data points:

Factors influencing GPU requirements:

LLM architecture: Different LLMs have varying complexities that affect processing demands.

User requests: The type and complexity of user requests heavily influence processing needs. A model answering simple questions requires less power than one generating creative text formats.

Response time: Faster response times necessitate more GPUs for parallel processing.

Making an educated guess:

Here's a simplified model with placeholder values to get a rough idea:

Monthly users: 100 million

Requests per user per month: We assume an average of 10 requests per month, which looks reasonable.

Processing time per request: This depends on the complexity of the request. Let's assume an average of 0.006 seconds, the fastest figure I could find and therefore the most conservative assumption for this estimate.

Calculate total requests per month:

Total requests per month = Monthly Users * Requests per User per Month = 100 million * 10 requests/month = 1 billion requests/month

Calculate total processing time per month:

Total processing time (seconds) = Total requests per month * Processing time per request = 1 billion requests/month * 0.006 seconds/request = 6 million seconds/month
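For readers who want to rerun these first two steps with their own assumptions, here is a minimal Python sketch. The constant names and the `monthly_workload` helper are illustrative only; the inputs are simply the placeholder values above, not measured data.

```python
# Placeholder assumptions from the simplified model above (not measured values)
MONTHLY_USERS = 100_000_000
REQUESTS_PER_USER_PER_MONTH = 10
SECONDS_PER_REQUEST = 0.006


def monthly_workload(users: int, requests_per_user: int, seconds_per_request: float) -> tuple[int, float]:
    """Return (total requests per month, total processing seconds per month)."""
    total_requests = users * requests_per_user
    processing_seconds = total_requests * seconds_per_request
    return total_requests, processing_seconds


requests, seconds = monthly_workload(MONTHLY_USERS, REQUESTS_PER_USER_PER_MONTH, SECONDS_PER_REQUEST)
print(f"{requests:,} requests/month")              # 1,000,000,000 requests/month
print(f"{seconds:,.0f} processing seconds/month")  # 6,000,000 processing seconds/month
```

Changing any one constant, for example a longer processing time for complex prompts, shows how quickly the workload scales.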

Placeholder for GPU processing power:

This depends on the specific GPU model and architecture. Let's assume a GPU can process 100 requests per second.

Estimate number of GPUs needed (assuming full utilization):

Number of GPUs = Total processing time (seconds) / GPU processing power (requests/second) = (6 million seconds/month) / (100 requests/second) = 60,000 GPUs (This is a very rough estimate)

Important to remember:

This is a simplified model and does not account for all variables.

The actual number of GPUs can be significantly higher or lower depending on the factors mentioned earlier.

Optimizations such as batching (grouping requests together at the cost of some response speed) can improve efficiency, reducing the required number of GPUs.

Additional considerations:

Cost: Running a large number of GPUs can be expensive due to electricity consumption and hardware costs.

Scalability: The infrastructure needs to be scalable to accommodate future growth in user base and request volume.

To calculate the energy use per GPU (kWh/GPU), I assumed an Nvidia H100 GPU with a total power consumption of 700 W.
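As a rough sketch of the per-GPU figure: if an H100 drew its full 700 W around the clock, it would use about 6,132 kWh a year. That full-load assumption is mine and is a simplification; real utilization is usually lower, and host servers, cooling, and other data center overheads are excluded. The `annual_kwh` helper below is hypothetical, written only for this illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored


def annual_kwh(power_watts: float, gpus: int = 1, utilization: float = 1.0) -> float:
    """Annual energy in kWh for `gpus` devices drawing `power_watts` at a given utilization.

    Assumes a constant draw; excludes host servers, cooling, and other data center overheads.
    """
    watt_hours = power_watts * HOURS_PER_YEAR * utilization * gpus
    return watt_hours / 1000  # Wh -> kWh


print(annual_kwh(700))  # 6132.0 kWh for one H100 at a full 700 W, all year
```

Lowering `utilization` (say, to 0.5) scales the result linearly, which is a quick way to test how sensitive the conclusion is to the full-load assumption.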

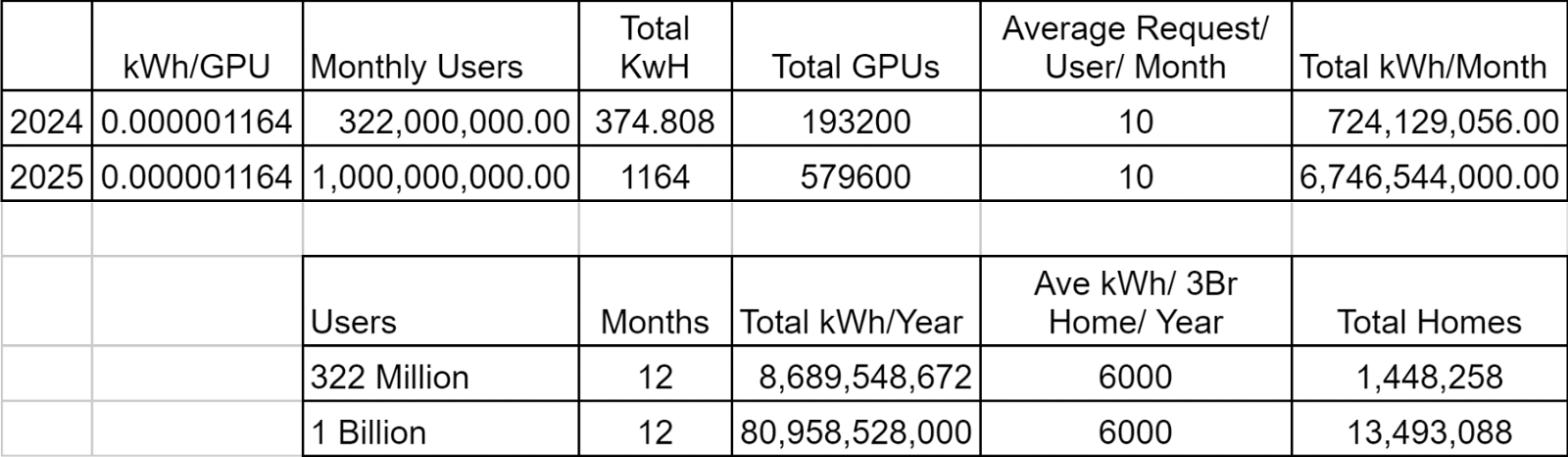

By the summer of 2025, LLMs are forecast to have around 1 billion monthly users, compared with 322 million in March 2024. This growth looks realistic when compared with Facebook's (Meta's) growth from 500 million to 1 billion users between 2010 and 2012, when Internet use was less widespread than today, 4G was still in its infancy, and there was no 5G.
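To put the comparison in numbers, the sketch below computes the compound monthly growth rate each case implies. The month counts are my assumptions, not figures from the forecast: 15 months from March 2024 to June 2025 for LLMs, and 24 months for Facebook's 2010 to 2012 doubling. The `monthly_growth_rate` function is illustrative.

```python
def monthly_growth_rate(start: float, end: float, months: int) -> float:
    """Compound monthly growth rate needed to go from `start` to `end` in `months` months."""
    return (end / start) ** (1 / months) - 1


# Assumed horizons (illustrative): March 2024 -> June 2025, and a two-year span for Facebook.
llm_rate = monthly_growth_rate(322e6, 1e9, months=15)
facebook_rate = monthly_growth_rate(500e6, 1e9, months=24)

print(f"LLMs: {llm_rate:.1%} per month")           # 7.8% per month
print(f"Facebook: {facebook_rate:.1%} per month")  # 2.9% per month
```

Shifting "summer 2025" to July or August changes the LLM figure only slightly, so the order of magnitude holds under either reading.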

As per the chart above, the annual energy consumption of the two main LLMs will equal that of 13.5 million 3-bedroom homes by 2025. And AI is used far more pervasively than just in LLMs: Nvidia is expected to produce 1.5 million H100 GPUs in 2024.
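Combining the two figures already in this article (700 W per H100 and 1.5 million units in 2024) gives a back-of-the-envelope estimate of the GPU-only draw. This sketch assumes, as a simplification, that every unit runs at full power all year; it ignores servers, cooling, and the rest of the data center, so it is not a total.

```python
H100_POWER_WATTS = 700       # total power figure used above
H100_UNITS_2024 = 1_500_000  # expected 2024 production
HOURS_PER_YEAR = 24 * 365    # leap years ignored

fleet_kwh = H100_POWER_WATTS * HOURS_PER_YEAR * H100_UNITS_2024 / 1000  # Wh -> kWh
fleet_twh = fleet_kwh / 1e9  # 1 TWh = 1e9 kWh

print(f"{fleet_kwh:,.0f} kWh/year = {fleet_twh:.1f} TWh/year")
# 9,198,000,000 kWh/year = 9.2 TWh/year
```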

For those of you who want to know the size of an H100 GPU, it measures 11.1 cm in height, 3.48 cm in width, and 26.8 cm in length. These GPUs are built into GPU servers, which form High-Performance Computing clusters or AI training rigs. The servers and rigs, like the data center itself, add to power consumption. There are no figures for actual kWh usage because architectures vary, but it's safe to assume that total energy consumption is far greater than that of the GPUs alone. There is also no data on how much energy is used to produce these GPUs, the server architecture, and the data center infrastructure.

Causal Effect: There will be increased demand for energy in a world where the energy supply is already strained. Meeting that demand will largely depend on expanding wind, solar, hydro, biofuel, and nuclear power, as fossil fuel extraction is peaking and becoming increasingly inefficient. This is why solar energy is now cheaper than fossil fuels.

Connecting LLM data centers to the grid requires upgrading the grid and deploying renewable energy, which in turn requires enormous quantities of materials extracted from deep inside the earth. Biofuel demand will increase, requiring more rainforest to be replaced by palm oil plantations. Increased mining output from low- and middle-income countries with poor environmental standards will increase air, land, and water pollution. LLM data centers need water for cooling, which currently comes from the same supply we use for agriculture, livestock, and people, adding to the freshwater crisis. LLMs also require maintenance, so spare parts will be needed. We already have an air, land, and water pollution crisis.

AI will increase productivity in almost all industries and will likely replace lower-skilled, higher-cost functions that AI can do much faster and more cheaply. We've seen this many times before when other technologies were deployed, so this should not surprise you. Increased productivity brings wonderful prosperity, except that we need to factor in the Jevons Paradox, which explains how an increase in efficiency and productivity leads to higher consumption. This negates the positive effects of efficiency, as more goods can now be produced, and it increases our planetary overshoot, biodiversity loss, and the risk of ecological collapse. In addition, we've already exceeded 6 of the 9 planetary boundaries. We are likely to face more climate and natural disasters in the future.
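To make the Jevons Paradox concrete, here is a toy model rather than data. It assumes, for simplicity, that the cost of a unit of service falls in proportion to efficiency and that demand for the service has a constant price elasticity. Under those assumptions resource use rises with efficiency whenever the elasticity is above 1, which is the "backfire" case the paradox describes. The function name and parameters are hypothetical.

```python
def resource_use(efficiency: float, elasticity: float, scale: float = 1.0) -> float:
    """Toy rebound model: resource consumed to meet demand for a service.

    Assumptions (illustrative only):
    - price per unit of service is proportional to 1 / efficiency
    - demand for the service has constant price elasticity `elasticity`
    """
    price = 1.0 / efficiency
    service_demand = scale * price ** (-elasticity)
    return service_demand / efficiency  # resource needed = service / efficiency


for elasticity in (0.5, 1.0, 1.5):
    before = resource_use(1.0, elasticity)
    after = resource_use(2.0, elasticity)  # efficiency doubles
    print(f"elasticity {elasticity}: resource use x{after / before:.2f}")
```

In this toy model, doubling efficiency cuts resource use by about 29% when the elasticity is 0.5, leaves it unchanged at 1.0, and raises it by about 41% at 1.5.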

The growth of AI is global, and all states will use AI for military defense, economic growth, and social well-being. For the most part, states function as benevolent authorities on behalf of their citizens. They are supposed to, and many states believe they do. This is true for all countries, whether or not you agree with their politics and governance. Geopolitical tensions are at risk of escalating. Given the externality risks above, we should evaluate AI's influence over society, as the power of AI, in terms of both possibilities and risks, is exponentially greater than that of the Internet and social media today. Social media has done an excellent job of limbic hijacking, which has benefited consumer goods companies enormously. The risk AI poses to society is brain hacking, which would leave AI as our sole source of intelligence because we've voluntarily outsourced our thinking to it.

For AI to benefit our planet, we need to understand the risks and mitigate them as much as possible. The unchecked proliferation of AI will increase the following risks:

The exponential growth of AI will increase energy demand, slowing down the fossil fuel phase-out.

AI's growth will require exponential growth in natural resource extraction to build the infrastructure needed to support its internal and external effects. Finite resources constrain AI growth.

Crossing more planetary boundaries and deepening overshoot will increase the risk of ecological collapse, which would first impact the global food supply.

Voluntary brain hacking leads to mental health problems, already the fastest-growing disease in the world and likely to become the number one disease of the 21st century.

I will leave you with the following:

Evidence and reason can often estimate risk reasonably well, but only a value judgment can determine acceptable risk. It is also good to remember that prolonging risks is profitable for a few people.

I recommend you evaluate your AI use decisions based on the morals and values you hold dear regarding your health and that of our home planet, Earth.

AI is enormously powerful and can benefit education, health, and decision-making, but ignoring its downsides risks diminishing its potential value to civilization and the planet.

illuminem Voices is a democratic space presenting the thoughts and opinions of leading Sustainability & Energy writers; their opinions do not necessarily represent those of illuminem.
